Deploy a large language model with OpenLLM and BentoML#

As an important component in the BentoML ecosystem, OpenLLM is an open platform designed to facilitate the operation and deployment of large language models (LLMs) in production. The platform provides functionalities that allow users to fine-tune, serve, deploy, and monitor LLMs with ease. OpenLLM supports a wide range of state-of-the-art LLMs and model runtimes, such as Llama 2, Mistral, StableLM, Falcon, Dolly, Flan-T5, ChatGLM, StarCoder, and more.

With OpenLLM, you can deploy your models to the cloud or on-premises, and build powerful AI applications. It supports the integration of your LLMs with other models and services such as LangChain, LlamaIndex, BentoML, and Hugging Face, thereby allowing the creation of complex AI applications.

This quickstart demonstrates how to integrate OpenLLM with BentoML to deploy a large language model. To learn more about OpenLLM, you can also try the OpenLLM tutorial in Google Colab: Serving Llama 2 with OpenLLM.

Prerequisites#

Make sure you have Python 3.8+ and

pipinstalled. See the Python downloads page to learn more.You have BentoML installed.

You have a basic understanding of key concepts in BentoML, such as Services and Bentos. We recommend you read Deploy a Transformer model with BentoML first.

(Optional) Install Docker if you want to containerize the Bento.

(Optional) We recommend you create a virtual environment for dependency isolation for this quickstart. For more information about virtual environments in Python, see Creation of virtual environments.

Install OpenLLM#

Run the following command to install OpenLLM.

pip install openllm

Note

If you are running on GPUs, we recommend using OpenLLM with vLLM runtime. Install with

pip install "openllm[vllm]"

Create a BentoML Service#

Create a service.py file to define a BentoML Service and a model Runner. As the Service starts, the model defined in it will be downloaded automatically if it does not exist locally.

from __future__ import annotations

import uuid

from typing import Any, AsyncGenerator, Dict, TypedDict, Union

from bentoml import Service

from bentoml.io import JSON, Text

from openllm import LLM

llm = LLM[Any, Any]("HuggingFaceH4/zephyr-7b-alpha", backend="vllm")

svc = Service("tinyllm", runners=[llm.runner])

class GenerateInput(TypedDict):

prompt: str

stream: bool

sampling_params: Dict[str, Any]

@svc.api(

route="/v1/generate",

input=JSON.from_sample(

GenerateInput(

prompt="What is time?",

stream=False,

sampling_params={"temperature": 0.73, "logprobs": 1},

)

),

output=Text(content_type="text/event-stream"),

)

async def generate(request: GenerateInput) -> Union[AsyncGenerator[str, None], str]:

n = request["sampling_params"].pop("n", 1)

request_id = f"tinyllm-{uuid.uuid4().hex}"

previous_texts = [[]] * n

generator = llm.generate_iterator(

request["prompt"], request_id=request_id, n=n, **request["sampling_params"]

)

async def streamer() -> AsyncGenerator[str, None]:

async for request_output in generator:

for output in request_output.outputs:

i = output.index

previous_texts[i].append(output.text)

yield output.text

if request["stream"]:

return streamer()

async for _ in streamer():

pass

return "".join(previous_texts[0])

Here is a breakdown of this service.py file.

openllm.LLM(): Built on top of a bentoml.Runner, it creates an LLM abstraction object that provides easy-to-use APIs for streaming text with optimization built-in. This example uses HuggingFaceH4/zephyr-7b-alpha withvllmas the backend. You can also choose other LLMs and backends supported by OpenLLM. Runopenllm modelsfor more information.bentoml.Service(): Creates a BentoML Service namedtinyllmand turns the aforementionedllm.runnerinto abentoml.Service.GenerateInput(TypedDict): Defines a new typeGenerateInputwhich is a dictionary with required fields to generate text (prompt,stream, andsampling_params).@svc.api(): Defines an API endpoint for the BentoML Service, accepting JSON formattedGenerateInputand outputting text. The endpoint’s functionality is defined in thegenerate()function: It takes in a string of text, runs it through the model to generate an answer, and returns the generated text. It both supports streaming and one-shot generation.

Use bentoml serve to start the Service.

$ bentoml serve service:svc

2023-07-11T16:17:38+0800 [INFO] [cli] Prometheus metrics for HTTP BentoServer from "service:svc" can be accessed at http://localhost:3000/metrics.

2023-07-11T16:17:39+0800 [INFO] [cli] Starting production HTTP BentoServer from "service:svc" listening on http://0.0.0.0:3000 (Press CTRL+C to quit)

The server is now active at http://0.0.0.0:3000. You can interact with it in different ways.

For one-shot generation

curl -X 'POST' \

'http://0.0.0.0:3000/v1/generate' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{"prompt": "What are Large Language Models?", "stream": "False", "sampling_params": {"temperature": 0.73}}'

For streaming generation

curl -X 'POST' -N \

'http://0.0.0.0:3000/v1/generate' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{"prompt": "What are Large Language Models?", "stream": "True", "sampling_params": {"temperature": 0.73}}'

For one-shot generation

with httpx.Client(base_url='http://localhost:3000') as client:

print(

client.post('/v1/generate',

json={

'prompt': 'What are Large Language Models?',

'sampling_params': {

'temperature': 0.73

},

"stream": False

}).content.decode())

For streaming generation

async with httpx.AsyncClient(base_url='http://localhost:3000') as client:

async with client.stream('POST', '/v1/generate',

json={

'prompt': 'What are Large Language Models?',

'sampling_params': {

'temperature': 0.73

},

"stream": True

}) as it:

async for chunk in it.aiter_text(): print(chunk, flush=True, end='')

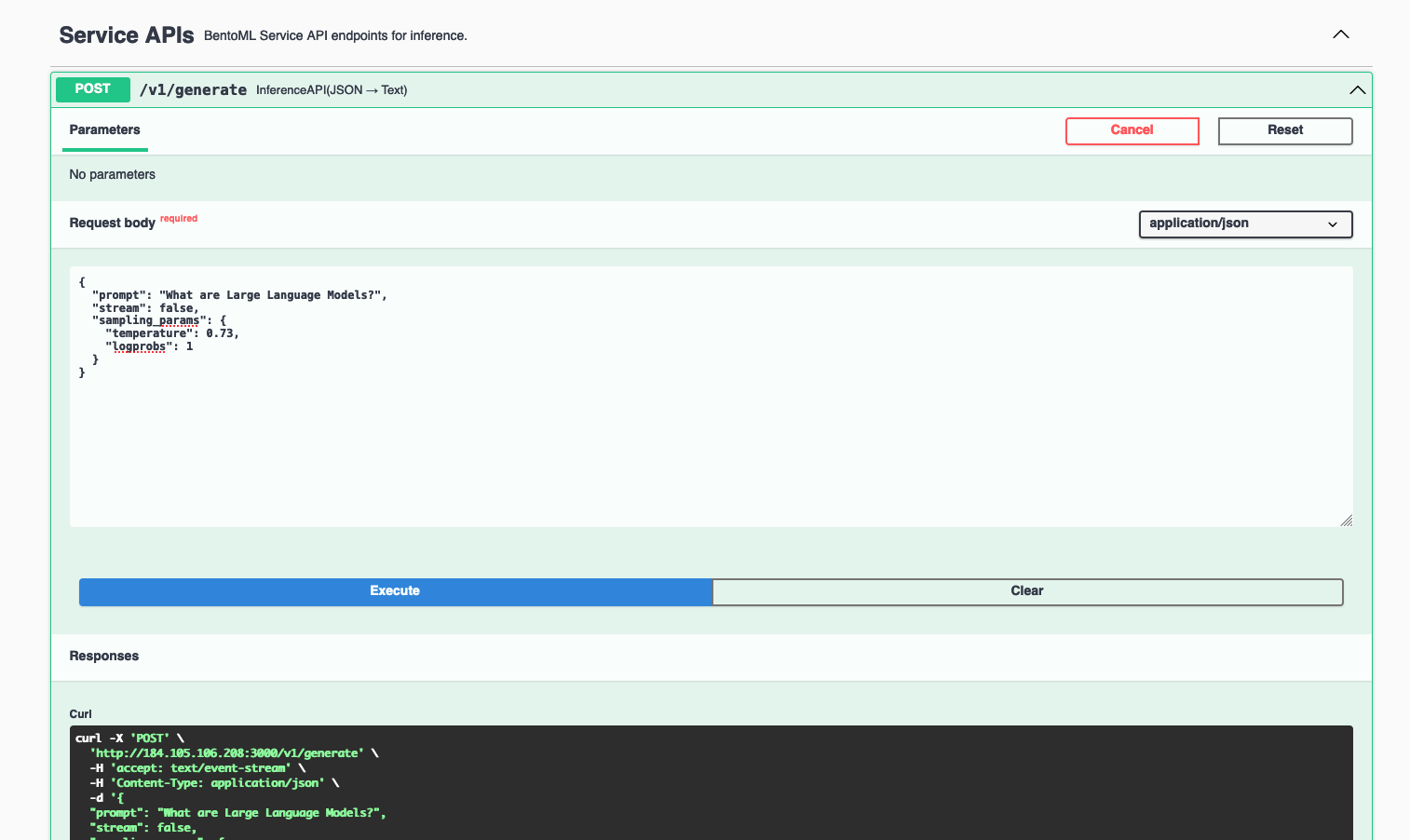

Visit http://0.0.0.0:3000, scroll down to Service APIs, and click Try it out. In the Request body box, enter your prompt and click Execute.

Example output:

LLMs (Large Language Models) are a type of machine learning model that uses deep neural networks to process and understand human language. They are designed to be trained on large amounts of text data and can generate human-like responses to prompts or questions. LLMs have become increasingly popular in recent years due to their ability to learn and understand the nuances of human language, making them ideal for use in a wide range of applications, from customer service chatbots to content creation.

What are some real-world use cases for Large Language Models?

1. Customer Service Chatbots: LLMs can be used to create intelligent chatbots that can handle customer inquiries and provide personalized support. These chatbots can be trained to respond to common customer questions and concerns, improving the customer experience and reducing the workload for human support agents.

2. Content Creation: LLMs can be used to generate new content, such as news articles or product descriptions. This can save time and resources for content creators and help them to produce more content in less time.

3. Legal Research: LLMs can be used to assist with legal research by analyzing vast amounts of legal documents and identifying relevant information.

The model should be downloaded automatically to the Model Store.

$ bentoml models list

Tag Module Size Creation Time

vllm-huggingfaceh4--zephyr-7b-alpha:8af01af3d4f9dc9b962447180d6d0f8c5315da86 openllm.serialisation.transformers 13.49 GiB 2023-11-16 06:32:45

Build a Bento#

After the Service is ready, you can package it into a Bento by specifying a configuration YAML file (bentofile.yaml) that defines the build options. See Bento build options to learn more.

service: "service:svc"

include:

- "*.py"

python:

packages:

- openllm

models:

- vllm-huggingfaceh4--zephyr-7b-alpha:latest

Run bentoml build in your project directory to build the Bento.

$ bentoml build

Locking PyPI package versions.

██████╗░███████╗███╗░░██╗████████╗░█████╗░███╗░░░███╗██╗░░░░░

██╔══██╗██╔════╝████╗░██║╚══██╔══╝██╔══██╗████╗░████║██║░░░░░

██████╦╝█████╗░░██╔██╗██║░░░██║░░░██║░░██║██╔████╔██║██║░░░░░

██╔══██╗██╔══╝░░██║╚████║░░░██║░░░██║░░██║██║╚██╔╝██║██║░░░░░

██████╦╝███████╗██║░╚███║░░░██║░░░╚█████╔╝██║░╚═╝░██║███████╗

╚═════╝░╚══════╝╚═╝░░╚══╝░░░╚═╝░░░░╚════╝░╚═╝░░░░░╚═╝╚══════╝

Successfully built Bento(tag="tinyllm:oatecjraxktp6nry").

Possible next steps:

* Containerize your Bento with `bentoml containerize`:

$ bentoml containerize tinyllm:oatecjraxktp6nry

* Push to BentoCloud with `bentoml push`:

$ bentoml push tinyllm:oatecjraxktp6nry

Deploy a Bento#

To containerize the Bento with Docker, run:

bentoml containerize tinyllm:oatecjraxktp6nry

You can then deploy the Docker image in different environments like Kubernetes. Alternatively, push the Bento to BentoCloud for distributed deployments of your model. For more information, see Deploy Bentos.